22.3 User Privacy

Today's technology products can track and collect details about users better than ever before. This is great news for products that attempt to reach specific users or predict their users' needs and wants, but this advanced tracking comes at a price—including a lack of user trust, government intervention, and the added responsibility to protect all that data.

In this checkpoint, you'll dig into what privacy means for the products you'll be developing as a PM. You'll look at legal and social issues in technology that impact product decisions, and learn about best practices and the actions you can take to be privacy focused. All of this will make you a better, more well-rounded product manager.

By the end of this checkpoint, you should be able to do the following:

- Explain how privacy concerns can affect your product decisions

What is privacy?

One of the definitions of privacy at Dictionary.com is the "freedom from damaging publicity, public scrutiny, secret surveillance, or unauthorized disclosure of one's personal data or information, as by a government, corporation, or individual." In other words, your users should have the ability to keep their personal information to themselves and choose who has access to certain information about them. But how does that apply to the kinds of products that you'll be responsible for?

Digital apps collect all kinds of information about people, including the sites they visit, content they enter on those sites, and people they interact with. Some of this information can be sensitive. For example, imagine that you're searching for a new job. Although your current employer might not regularly review your browser history on your work computer, they probably still have access to this information. As an extra precaution, you'd probably use your personal computer to search for jobs to avoid revealing those private details about your job hunt to your employer. Or what if every person who viewed your information on social media could have access to details such as your home address? There are many good reasons you might want to avoid that. Everyone has information that they prefer to keep to themselves or selectively share only with certain people.

When you think about how tech products work, privacy gets even more complex. Analytics information gets shared with third parties like Google Analytics, and each of those third parties poses a potential risk to user privacy. Your users' data can only be kept private if you have good security practices, as discussed in the previous checkpoint. There are also many legal issues involved with user privacy. As a PM, you need to be aware of all of this as you create new products and features.

Social issues in privacy

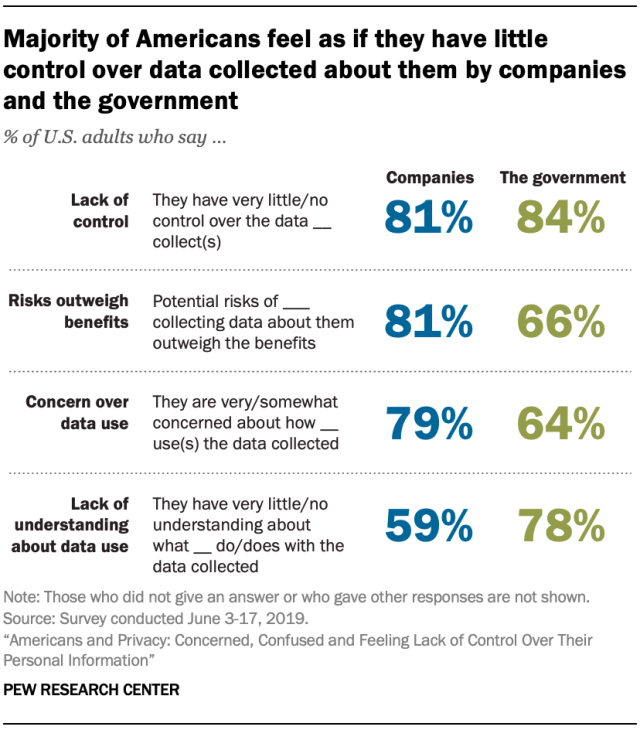

Privacy is becoming a bigger issue in people's minds. A recent Pew Research study found that roughly 80% of respondents feel they have little to no control over who has access to their information, are concerned about how their data is being used, and think the risks of collecting that information outweigh the benefits. Even worse, many people don't understand how their data is being used after it is collected.

Why are people so worried about privacy? It's a combination of problems. First, many companies have had notable data leaks, and users are concerned about having their private data exposed. For example, AdultFriendFinder was a network of sites targeted to people who were looking for sexual liaisons or affairs. In 2016, it was hacked, nearly every password was cracked, and user details were leaked. Imagine if you were a user of that site who expected your information to be kept private, and suddenly everyone knew that you were using it. And you've probably heard about people whose phones have been hacked to reveal sensitive text messages or photos, like then-Amazon CEO Jeff Bezos. As security breaches have become more common, sites like Have I Been Pwned have emerged that let you enter your email address to see whether it was involved in any known leaks. Go check it out. Are you certain that your private information is secure?

Another big concern around privacy involves the collection of data about people and their online activities. You can see this in two ways. First, your activity can "follow" you online. For example, you might be looking at a lawn mower on Home Depot's site, and then on the next website you visit you see an advertisement suggesting that you buy a lawn mower on Amazon. It's creepy, right? Second, these massive amounts of data (such as age, gender, location, and even sensitive information like sexual orientation or religion) can be used to build an assumed profile of a person. If you don't think that's a big deal, keep in mind that there are countries and groups who persecute people for their sexual orientation, religion, or other private information. The fact that such information can now be inferred through digital data collection is deeply disturbing.

These concerns mean that companies now have an even greater responsibility to their users to explain what data is being collected, how it's being protected, and how it's being used to make their products better. And for governments, it means creating new legislation to ensure that users' privacy is protected.

Legal issues in privacy

As you learned in previous checkpoints and modules, data from your product gets collected and distributed to many places. For example, it might go into databases hosted by Amazon, analytics tools hosted by Google, marketing and sales tools that track who is responding to your content, and advertising tools to target potential users.

After so many data breaches, both at companies and at the third parties they've shared user data with, many governments have stepped in to legislate requirements for protecting user privacy. These laws have forced companies to scramble to update their products, privacy policies, and third-party integrations in order to comply. Because it's very likely that there will be more privacy laws and restrictions on the use of private data in the future, it's worth understanding how user privacy laws work.

Crucial privacy laws

The two pieces of legislation that are most important to understand are the GDPR and the CCPA. The GDPR (General Data Protection Regulation) is the privacy law governing data collection and use for companies located in the EU and companies whose users are in the EU. The CCPA (California Consumer Privacy Act) works in a similar way for businesses with users located in California, although it only applies to businesses above certain revenue or data-volume thresholds. Since tech products are accessible to nearly everyone in the world, most tech companies need to account for both of these laws or face harsh penalties for noncompliance. You'll learn how these laws interpret privacy in a moment.

There are also other privacy laws and standards that apply in specific cases. If you collect health information, then HIPAA (the Health Insurance Portability and Accountability Act of 1996) may apply, with strict guidelines for how that health information can be stored, who can access it, and who it can be shared with. If your product targets or knowingly has users that are children, COPPA (the Children's Online Privacy Protection Act of 1998) has special requirements for how you can collect and share that information, including things like getting parental consent. If you store payment information like credit cards, PCI DSS (the Payment Card Industry Data Security Standard) applies. It's an industry standard enforced by the card networks rather than a law, but it has a huge list of requirements that you must adhere to.

These laws come with harsh penalties if you fail to comply or if data leaks from your system—even if you have put reasonable protections in place. That might sound scary, but thankfully there are many best practices and tools that can help manage your users' data privacy.

What's considered private information?

Below is a list of many different kinds of information that have been deemed private in various laws. The list is not exhaustive because many laws are vague about what is or isn't covered. Also, what's considered private varies based on your company's and your users' state and country. Always consult with a lawyer if you're unsure whether a specific piece of information falls under legal protection. Your future employer will most likely have in-house counsel or an outside lawyer that you can talk to.

If your product asks for, stores, generates, infers, or sends to third parties any of the following information, you'll need to follow the appropriate guidelines:

- Any information that can be used to identify an individual, such as their name, address, aliases, email address, Social Security number, driver's license number, or passport number.

- Information about protected status like gender, sexual orientation, ethnicity, or veteran status.

- Commercial records like products purchased, property records, or even items browsed or considered.

- Biometric information like fingerprints or face scans.

- Internet or electronic device activity like sites browsed, apps used, and engagement within those.

- Information about health, including current or past conditions.

- Geolocation data.

- Information about current or past employment.

- Niche information like library checkouts or video rental history (this information is protected by specific laws covering that data).

- Inferred information about any of the above, like guessing your age from your browsing activity, attitudes, preferences, and other such information.

What are your obligations under these privacy laws?

If your product handles any of the data described above, then you're subject to laws that dictate how to store, share, and disclose any data deemed private. In general, these laws require you to take the following steps:

- Get affirmative consent from users before you collect and track their private data, including browsing activity.

- Disclose all third parties that receive, store, or process data from your company.

- Collect any tracking data anonymously.

- Notify users in case of any data breaches or security issues that might have revealed their private information.

- Ensure that any companies who receive your data adhere to these laws as well.

- Allow users to request that your company send them all the data you've collected about them.

- Allow users to request that their accounts and associated data be completely deleted (this is known as the right to be forgotten).

- Implement reasonable protection, access control, and other security measures to protect private data.

- Appoint a data protection officer who ensures compliance with all of the above measures (the GDPR requires this only for certain kinds of organizations).

- Establish internal accountability, including consequences for teams and personnel that don't adhere to any of the above measures.

One of the hidden aspects of following these laws is that your product is not the only thing creating and storing private data. If you use Slack and your coworkers share a screenshot of a user's profile in Slack, then Slack is also a privacy concern. If your marketing team implements a new email system, that system is storing private info like email addresses and email views—and it's covered by these privacy laws! Imagine that your marketing team asks you to integrate that email tool's tracking into the website you manage. Now the privacy of their email tool is your problem, too.

Tracking consent

The GDPR requires that you obtain explicit consent from users before you can track them. You've probably seen the messages that pop up asking you to accept cookies or telling you that you're being tracked. These messages fulfill two requirements of the GDPR: disclosing that you're being tracked and giving you an opportunity to opt in to tracking. You can see a demo of one here (remember to turn off your ad blocker if you can't see the demo).

Keep in mind that users who don't consent to tracking (or who use ad blockers) will not be tracked by any of your analytics, advertising, or marketing tools. This can lead to gaps in your analytics data, like Google Analytics not seeing a conversion because tracking was disabled for that user. This is unavoidable, so you need to be aware that it can happen and educate others about why they're seeing gaps in the data your product is collecting.
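If you're curious where those gaps come from, here's a minimal sketch of consent-gated tracking. Everything in it is hypothetical (the `ConsentGatedTracker` class, its method names, and the in-memory list standing in for an analytics vendor); it only illustrates the opt-in idea and isn't how any particular analytics tool works.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentGatedTracker:
    """Forwards events to analytics only for users who opted in.

    Hypothetical example: a real product would call its analytics
    vendor's SDK inside `track` instead of appending to a list.
    """

    consented_users: set = field(default_factory=set)
    sent_events: list = field(default_factory=list)
    dropped_count: int = 0

    def record_consent(self, user_id: str, accepted: bool) -> None:
        # Opt-in model: nobody is tracked until they explicitly accept.
        if accepted:
            self.consented_users.add(user_id)
        else:
            self.consented_users.discard(user_id)

    def track(self, user_id: str, event: str) -> bool:
        if user_id not in self.consented_users:
            # No consent, so the event is never sent. Only an anonymous
            # count is kept, to show how large the analytics gap is.
            self.dropped_count += 1
            return False
        self.sent_events.append((user_id, event))
        return True


tracker = ConsentGatedTracker()
tracker.record_consent("user-1", accepted=True)
tracker.record_consent("user-2", accepted=False)
tracker.track("user-1", "purchase")
tracker.track("user-2", "purchase")  # never reaches analytics
print(tracker.sent_events, tracker.dropped_count)
```

Both users made a purchase, but analytics only ever sees one of them. That's exactly the kind of discrepancy you'll need to explain to your stakeholders.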

Data localization

Besides the EU and California laws, many other countries have laws that dictate the way that you can collect and store data. For example, many countries have data localization laws, which require that any data generated within a country or about that country's citizens be stored in that country. China and Russia in particular have such laws requiring that all personal data be stored in that country, and businesses must turn over encryption keys and data access to those governments on demand. If your company is thinking of expanding into new countries, you should investigate whether they have data localization laws and consider how that will impact your product, users, and infrastructure.
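To get a feel for the infrastructure impact, here's a simplified sketch of how an engineering team might route user data by country. The region names and the mapping are assumptions made up for illustration; the real rules would come from your legal team, and a real system would involve far more than a lookup table.

```python
# Hypothetical mapping from a user's country code to where their data must live.
REQUIRED_REGION = {"CN": "china-datacenter", "RU": "russia-datacenter"}
DEFAULT_REGION = "default-datacenter"


def storage_region_for(country_code: str) -> str:
    """Return the storage location for a user based on their country code."""
    return REQUIRED_REGION.get(country_code.upper(), DEFAULT_REGION)


print(storage_region_for("cn"))
print(storage_region_for("US"))
```

Even in this toy version, notice that every new country with a localization law means another entry in the table and another place where your data has to be hosted.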

Privacy in product development

Privacy is something that should always be on your mind during the product development process. Here are some best practices you can follow to make sure you give privacy serious consideration.

Speak to a lawyer

If you're ever unsure about your requirements for handling privacy or user data, talk with your company's lawyer. Larger companies often have privacy teams who can review your situation and help you make the best decision. Don't make assumptions about privacy; speak with someone who knows the law.

Be familiar with the laws and policies

Make sure you follow all the applicable parts of any laws in any countries that your product operates in. You also need to think about policies that are specific to platforms that you run on. For example, Apple (for iOS) and Google (for Android) have policies and best practices for handling user privacy on their platforms. You don't need to memorize these laws and policies, but you should be familiar enough with them to know when you need to dig into them in detail.

Use tools to manage privacy

Imagine that your product uses 15 different tools for analytics, sales, marketing, and other methods of tracking your users, and you suddenly get a request to have a user's account deleted. You could go into all 15 tools to delete the information, or you could use a data management tool like Segment that can manage your data for you. Such data synchronization platforms come with tools to help you manage your GDPR, CCPA, and other privacy requirements. It's much easier to buy a solution to this problem than it is to build or manage it yourself.
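To see why the do-it-yourself route gets painful, here's a rough sketch of what "delete this user everywhere" involves. This is not how Segment or any specific vendor works; the function name, the fake tools, and the failing CRM are all invented for illustration.

```python
from typing import Callable, Dict


def delete_user_everywhere(
    user_id: str, deleters: Dict[str, Callable[[str], bool]]
) -> Dict[str, bool]:
    """Ask every tool to delete one user; report which tools succeeded."""
    results = {}
    for tool_name, delete in deleters.items():
        try:
            results[tool_name] = bool(delete(user_id))
        except Exception:
            # One failing tool shouldn't stop the others; flag it for follow-up.
            results[tool_name] = False
    return results


# Stand-ins for real tools: each "tool" is just a dict of user records.
analytics = {"user-1": {"page_views": 42}}
email_tool = {"user-1": {"email": "pat@example.com"}}


def broken_crm_delete(user_id: str) -> bool:
    raise ConnectionError("CRM is unreachable")


report = delete_user_everywhere(
    "user-1",
    {
        "analytics": lambda uid: analytics.pop(uid, None) is not None,
        "email": lambda uid: email_tool.pop(uid, None) is not None,
        "crm": broken_crm_delete,
    },
)
print(report)
```

The report shows the analytics and email deletions succeeding and the CRM deletion failing, so someone still has to follow up before the request is truly complete. Multiply that by 15 tools and you can see the appeal of buying a solution.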

Collect only what you need

You'll be pushed by other teams to collect as much data as you can about your users, such as their location, job title, birthday, and more. But the more data you collect, the worse the impact of a data breach will be. As a best practice, only collect data you need, not data you want. You'll often learn that the data you want has little to no impact on your product, so you're better off collecting as little data as possible.
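One way engineering teams enforce this is with an allowlist: anything that isn't on the list never gets stored. Here's a minimal sketch, assuming a hypothetical signup form and a made-up list of needed fields.

```python
# Hypothetical allowlist: the only fields this product actually needs.
ALLOWED_FIELDS = {"email", "display_name"}


def minimize(submitted: dict) -> dict:
    """Keep only allowlisted fields; everything else is never stored."""
    return {key: value for key, value in submitted.items() if key in ALLOWED_FIELDS}


signup = {
    "email": "pat@example.com",
    "display_name": "Pat",
    "birthday": "1990-01-01",
    "job_title": "Engineer",
}
stored = minimize(signup)
print(stored)
```

The birthday and job title are dropped before they ever reach your database, so they can't be exposed in a breach. Adding a field to the allowlist then becomes a deliberate decision you can review, rather than something that happens by default.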

Have a privacy strategy

In general, you can take one of two approaches to privacy in your product. The first is to maximize privacy by applying the strictest privacy rules that apply to your product to every user. For example, you can always ask for consent before tracking a user, even though it's not required in many countries. The second is to segment your privacy rules, applying to each user only the rules that their location requires. For example, you can handle California users differently than all other US users. This is a big decision with many technical and legal consequences, so work carefully with others in your company to make the best decision.
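Here's a simplified sketch of how the two approaches differ for one decision: whether to show a consent prompt. The region codes and the `OPT_IN_REGIONS` set are assumptions for illustration only, not legal guidance; your lawyer decides what actually belongs in that set.

```python
from enum import Enum


class Strategy(Enum):
    MAXIMIZE = "maximize"  # strictest rules for every user
    SEGMENT = "segment"    # only the rules each user's region requires


# Hypothetical and heavily simplified: regions assumed to require opt-in consent.
OPT_IN_REGIONS = {"EU"}


def needs_consent_prompt(region: str, strategy: Strategy) -> bool:
    """Decide whether to ask this user for consent before tracking."""
    if strategy is Strategy.MAXIMIZE:
        return True
    return region in OPT_IN_REGIONS


print(needs_consent_prompt("US", Strategy.MAXIMIZE))
print(needs_consent_prompt("US", Strategy.SEGMENT))
print(needs_consent_prompt("EU", Strategy.SEGMENT))
```

The maximize approach is one line of logic, while the segment approach depends on a table of regional rules that someone has to keep current as laws change. That maintenance cost is part of the technical and legal tradeoff.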

Practice ✍️

Consider the following scenarios. What would you do? Write up a short answer for both examples:

- The CEO of your B2B business asks you to scrape LinkedIn for contacts so that the sales team can email them about your product offerings.

- Your head of sales doesn't like the GDPR banner that's on your website because he thinks it's scaring people away. He asks you to take it down.